ChatGPT was Silicon Valley's worst mistake

How a gross misunderstanding created one of the largest bubbles in history

The primal fear triggered by the rise of AI is like no other you’ve probably seen in your life. Forget immigration, religion, feminism or the Gaza Strip: you either love AI and ChatGPT governs your life, or you hate it and anyone involved with it. Between divisive workplace policies, heated family debates and getting flat-out insulted and ridiculed on social media for even suggesting that there might be actual use cases for AI, this author has had an easier time discussing past presidential elections than acknowledging the state of AI.

You might think that AI is useless, dangerous, that nobody wants this slop written by clankers and that this whole thing is a ridiculous bubble which will only burst into flames just like crypto and the metaverse did, only with much more real consequences due to the amounts involved. If that’s the case, you’re like Steve Ballmer predicting the iPhone will tank: a short-sighted dinosaur unable to see the comet coming to their doom.

Yet if you think that AI is the future, that developers are already experiencing huge performance boosts, that GPT-5 is coming to replace most white-collar jobs and that AGI will resolve all of Humankind’s problems by 2030, then the question becomes: how much nutritional value do you think Sam Altman’s bullshit contains exactly? Are you so gullible as to think he’s waving his superintelligence flag in every media outlet for any reason other than hiding the fact that OpenAI has completely stopped progressing?

The illusion of intelligence

Now that everyone feels offended, it is time to explain what LLMs (the technology behind ChatGPT, Claude and others) really are. They are made to be translation systems. French to English. Long text to short text. Soft information into structured data. Functional specification to code. Those are the key capabilities of an LLM.

Systems like GPT-2 or BERT were already pretty impressive on their own. GPT-2 was even the first time we saw an AI company say “this model is so powerful we don’t want to release it to the public yet”. Then GPT-3 was released and, sure enough, the capabilities were amazing. From general knowledge to translation, it felt to specialists like this opened the door to countless applications.

Yet what did the trick was GPT-3.5, also known as ChatGPT. Because you see, foundation models are not trained to work in any particular way, they just “hold” a representation of the world and its translation into text. ChatGPT invented putting it into a chat format.

Suddenly, you get a chat application that can answer any question on any topic. It was indeed revolutionary. Because it really feels like you are talking with a human. At least, until you start digging. Re-hashing Wikipedia works just fine, but specific knowledge starts being thin and reasoning falls apart completely. Any subject matter expert deep-diving into content written by an LLM, even today’s best ones, will tell you that it is absolute garbage.

As a matter of fact, chat is a terrible use-case for LLMs. As stated before, they are dumb, inanimate translation systems. They appear to be human-like when you inject billions of dollars into their training, but the illusion falls apart quickly when you start poking.

The Capacitor Effect: why LLMs peaked already

Proud owners of EVs, or anyone with a phone and some level of observation skill, will have noticed that the closer you get to 100% the slower the charge goes. In fact, you will be shocked to learn that both your car and your phone are lying to you: it is impossible to charge them to 100%. Imagine all the little electrons going into a bar. At first the bar is empty so it’s easy to get in. But the more packed it gets, the longer it takes for little Timmy the electron to find a spot on which to stand. Up to a point where there is still some space left but you really need to wait for the crowd to get into a special position to make room for just one more.

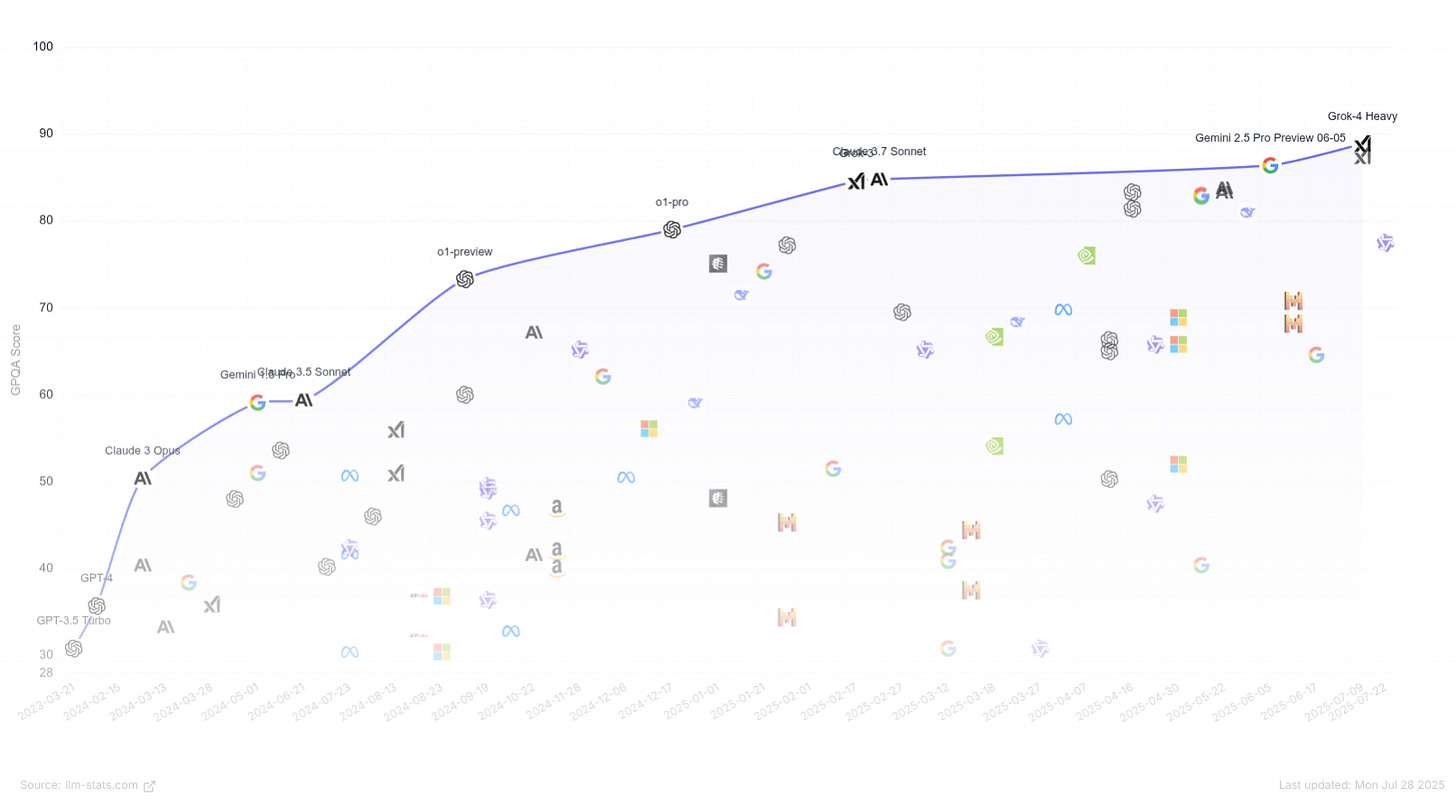
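If you want to put numbers on the bar metaphor, here is a rough sketch. It assumes an idealized capacitor whose charge follows 1 - exp(-t / tau), which is a textbook simplification and not how the charge controller in your car or phone actually behaves. The function name and the `tau` parameter are made up for the illustration.

```python
import math


def time_to_reach(fraction: float, tau: float = 1.0) -> float:
    """Time needed for an ideal charge curve 1 - exp(-t / tau) to reach `fraction`.

    `tau` is a hypothetical time constant; the result is in the same unit as `tau`.
    """
    if not 0.0 <= fraction < 1.0:
        # The curve only approaches 100% asymptotically, it never gets there.
        raise ValueError("fraction must be in [0, 1): 100% is never reached")
    return -tau * math.log(1.0 - fraction)


if __name__ == "__main__":
    for target in (0.50, 0.90, 0.99, 0.999):
        print(f"{target:.1%} charged after {time_to_reach(target):.2f} tau")
```

Under this assumption, going from 90% to 99% takes exactly as long as going from 0% to 90%, and every extra nine after that costs the same again.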

The same thing happens with LLMs and their benchmarks. At the beginning, there was huge room for improvement. But the more billions were poured into compute power, the more the easy problems got solved while the more complex ones remained elusive. This can very easily be observed when you graph the performance of LLMs on benchmarks: the progression has slowed to a halt over the past year.

Everyone who went through university knows what this is about. Most improvements in performance that you see these days are actually due to the fine-tuning method. Specifically, they are trained directly on the benchmarks. It’s exactly like taking an exam and training on the specific question types that you’re going to get instead of developing a deep understanding of the matter.

The fact that LLMs reached their current level of capability can be seen as a miracle. Knowing how they work under the hood, there was absolutely no reason to think that spending $10 million on training GPT-3 was going to yield any result at all. And yet they are insufficient on their own to achieve any kind of human-like intelligence. So it can also be seen as a curse: all that money spent on marginal gains while actual research could have been done.

For better or for worse, current models are however improving in one particular area: their cost. While obviously gaming benchmarks heavily, the latest models display a great level of usefulness at a much lower cost[1]. Soon we can expect that LLMs running on our phones will have the highest level of useful capability that an LLM can have.

Sam Altman: not a clown, the whole billion-dollar circus

Our little brains, whose baseline reasoning capability is much closer to LLMs than we’d like to admit, tend to imagine that if from GPT-3 to GPT-4 we’ve seen such a huge boost then the increment that GPT-5 will deliver will shatter the foundations of human society and GPT-6 will be indistinguishable from God.

Obviously, this will not be the case. But little Sam has gained a lot of traction: he now sits at the highest tables of the US elite and has received unfathomable amounts of funding, at least in theory. He promised to deliver AGI and now needs to keep people believing, because as soon as they stop the whole thing is going to come crashing down on his face.

So yes. You’ll see Sam Altman in every media outlet claiming that AI will wipe out entire job categories or that making an AI startup is akin to building a nuclear bomb. Honestly, every single one of his public appearances is hilarious. Because as we’ve covered so far, nothing could be further from the truth for at least the next 10 years.

Yann LeCun says LLMs are not going any further and that we need more fundamental research to get closer to human-like intelligence. Transformers, the technology that made LLMs possible, were introduced in 2017 and took 5 years to pan out, after being decades in the making. How much longer do we need before another breakthrough?

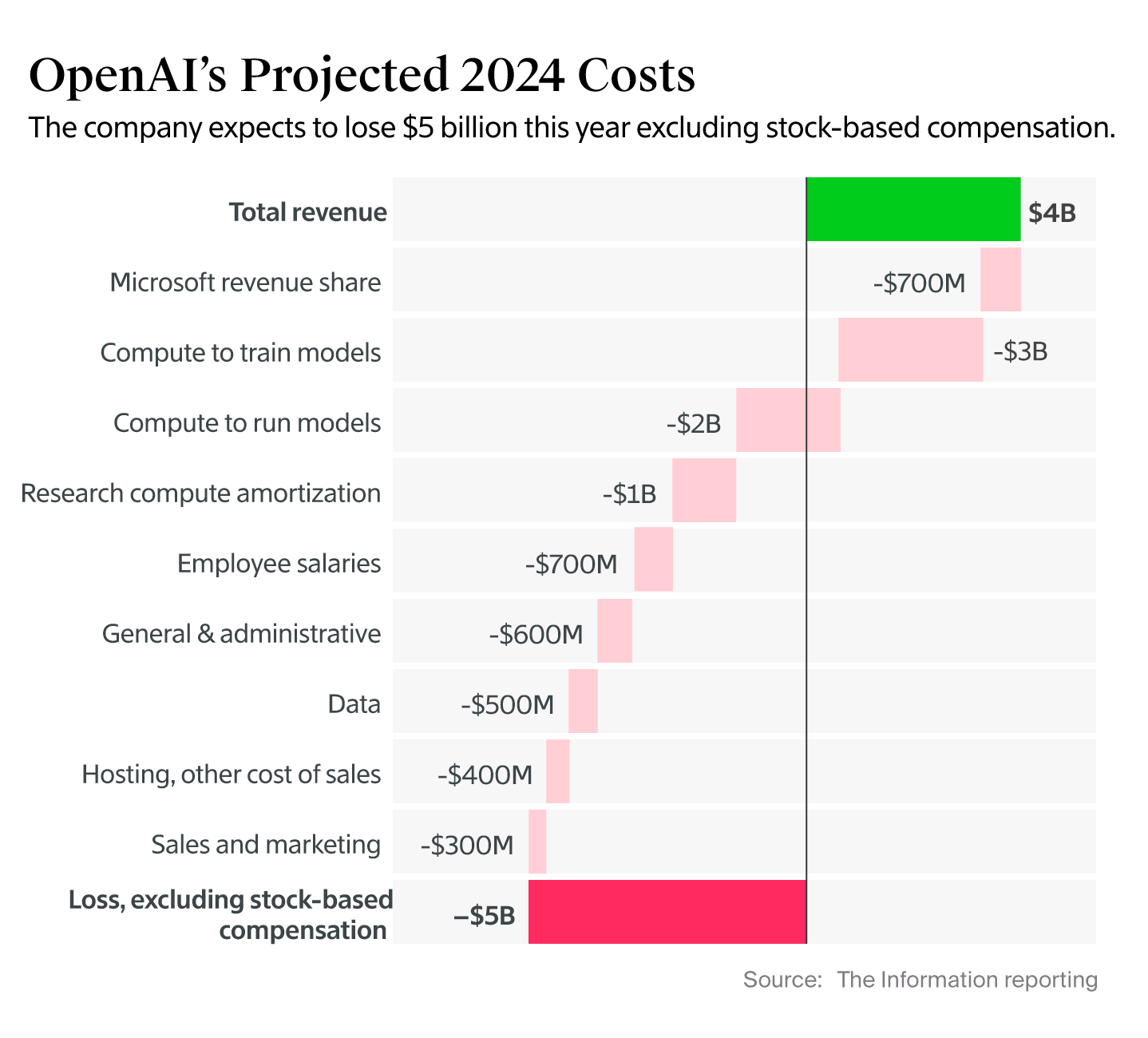

On the other hand, OpenAI’s costs are absolutely off the charts. And so are the costs of the rest of the industry. And so are the $500B promised for the Stargate project. That is a lot of money set to burn in order to either scale something that maxed out or train a technology that doesn’t exist yet.

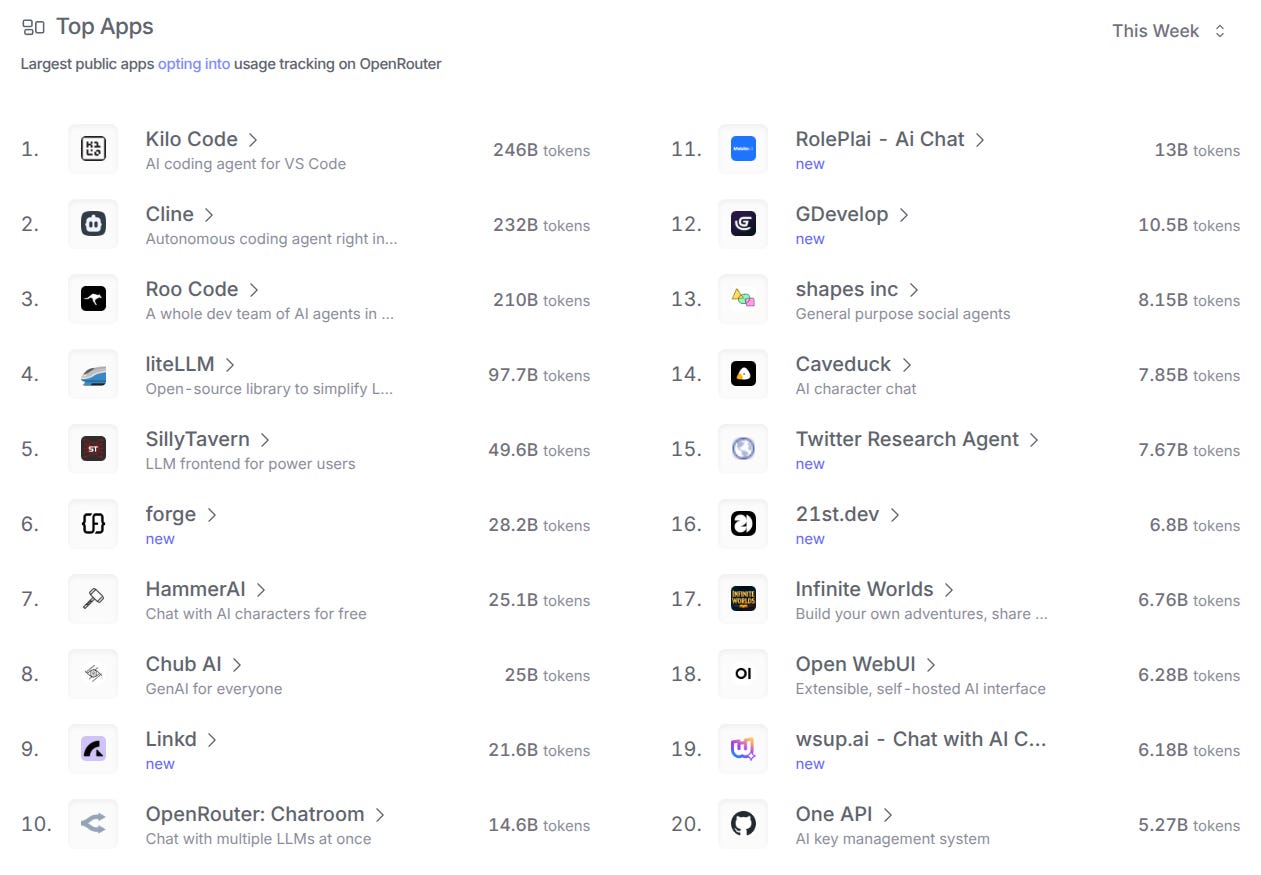

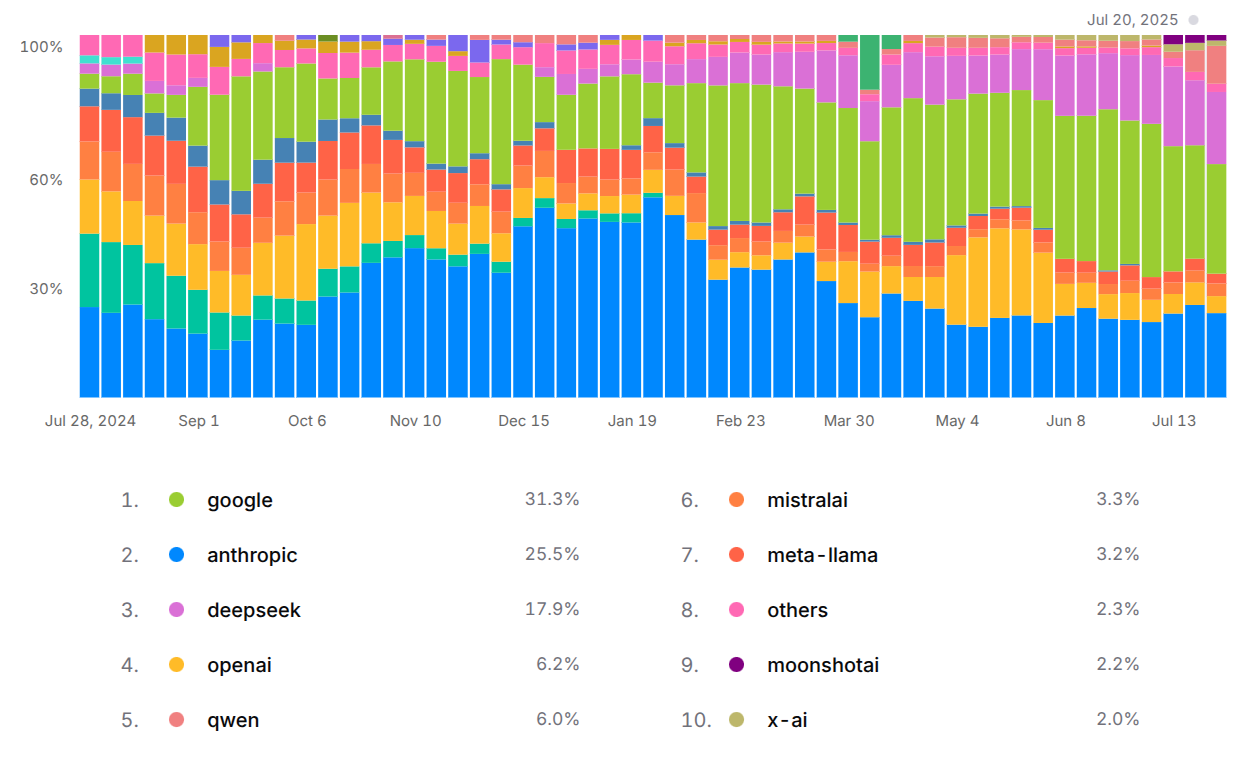

And that’s not all. There is an insane talent war going on where signing bonuses are rumoured to surpass $100M. In fact, most of OpenAI’s talent got poached by Anthropic, which probably explains why lately Claude has taken such a lead. And if you look at OpenRouter’s data[2], it appears that OpenAI is absolutely sidelined.

Last week, OpenAI had only 6.2% of the market share. They are absolutely dwarfed by Google, Anthropic and DeepSeek, which together total ¾ of the market. That’s how you understand the deception of Sam Altman: OpenAI, the poster boy for the whole industry, is barely relevant to business cases today. They could disappear tomorrow and it would have no impact.

The NVIDIA tax

Speaking of the talent war, NVIDIA is competing against itself, with its stock so high that its employees just quit because they have become so rich. NVIDIA is at the heart of why AI is so expensive: beyond the ridiculous pay of top AI engineers, most of everyone’s money just goes to them.

You see, AI is essentially a machine that computes every possible connection between every single one of the million words you can feed it at a time and decides which one is more likely to have meaning. This process is extremely compute-intensive and requires very powerful, dedicated hardware.

It is estimated that roughly half of this hardware is used to train models (feeding them every single text ever written until they learn something) and the other half is used for inference (answering actual queries).

The training process is very expensive, but it is also done in several steps. The first and most expensive one is the creation of the foundation model. Presumably, given the plateau mentioned above, there will be no more need for such a step fairly soon. Or at least not nearly as much as today.

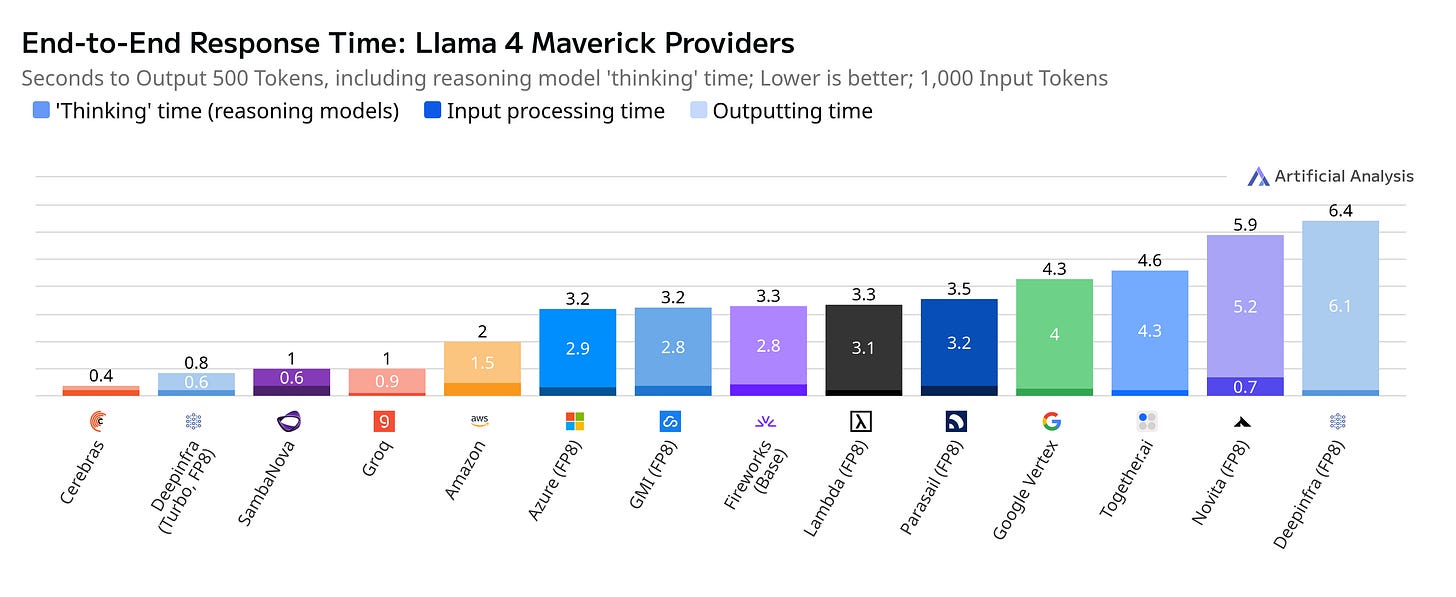

Then what about inference? GPUs are made for games and 3D. What you actually need is a chip like Google’s TPU, the LPU from Groq (with a “q”) or Cerebras, which are much more efficient. Comparing like with like, Cerebras is able to serve open-source models at ten times the speed of the competition. Meaning that while huge leaps in LLMs are not going to happen just now, huge leaps in hardware and efficiency are still going to arrive pretty fast.

And to add insult to injury, today, a Bluetooth-connected backdoor toy has more computing power than all of NASA during the Apollo missions. The destiny of compute is and always has been at the edge. There will be no exception here: AI will not be computed in datacenters. iPhones already come with AI models running locally, and it’s only a matter of time before top-of-the-line models are running on every single consumer device.

To summarize, right now everyone is buying unholy quantities of GPUs from NVIDIA, but of those only half are actually used for business needs, and when dedicated chips are mass-produced you can slash that by a factor of 10[3], and soon enough you won’t even need any of that because most of it will happen on consumer devices. If you think that your car’s value takes a hit when you take it out of the dealership, try buying a GPU in 2025.

Faster horses, faster humans

As the saying usually attributed to Henry Ford goes, a gentleman from the 18th century asked about the future of transportation would certainly have said he wanted faster horses. The same applies to every single technology. Just have a look at futuristic 80s movies: their vision of today’s technology is absolutely ridiculous. Cars would be flying but you would still be getting your taxi at a stand?

Now that AI has human-like qualities, is it going to take over white-collar jobs? Pinocchio says AI will replace normal people, but he has already been watering down his wine: between 2023 and 2025 the tone switched from “some jobs will be 50% AI” to “hey look we found some jobs where AI helps a bit”.

The jobs to be replaced according to the 2023 paper were:

- Interpreters and Translators
- Survey Researchers
- Poets, Lyricists and Creative Writers
- Public Relations Specialists
- Writers and Authors
- Tax Preparers
- Web and Digital Interface Designers
- Mathematicians
- Blockchain Engineers (is that even a thing?)
- Court Reporters and Captioners
- Proofreaders and Copy Markers
- Correspondence Clerks
- Accountants and Auditors
- News Analysts, Reporters and Journalists
- Legal Secretaries

Two years have passed. Did anything happen to these jobs?

LLMs being a translation tool, a third of translators are losing their jobs to AI, along with a quarter of illustrators. Working for a content website doesn’t seem to be a very safe career either, although this might have less to do with AI and more to do with the general context of content proliferation. There could be one or two other items in the list truly affected, but other than that it seems to be business as usual.

Is there any use for AI beyond translation then? We can once again use OpenRouter to understand what the majority of AI services are being used for.

- Code assistance tools
- “Role-playing” models (read: AI girlfriends and sex bots)

As it turns out, Melon Tusk got officially crowned the Incel King for breaking the Japanese Internet with an AI girlfriend. Host(ess) clubs are a real thing, in particular in Japan, and so are “dating sim” or even Maid Cafés. The need for companionship is extremely strong for some parts of the population, but solutions to that problem require either workers undergoing tremendous sacrifices or extremely shallow solutions. AI on the other hand delivers a fantastic blend—whether you like it or not—of being personalized, patient, conciliatory, educated, always available, always willing and all that for a very decent price.

Whatever the explanation for humans still doing essentially most tasks, whether it’s insufficient quality from LLMs or lack of liability, the fact remains that AI ended up shining first in a field where humans do not. Expect to see that happen countless times in the future: AI is simply good at different tasks than humans[4].

Not a bit of productivity found

The big flaw in this line of argumentation comes from coding tools, which appear to occupy the vast majority of consumed tokens, with companies like Cursor or Windsurf flaunting billion-dollar valuations. But do they actually make developers more productive?

Productivity measuring tools tried to answer just that. In a study published by Uplevel, they couldn’t find any evidence of changes in productivity for developers after they started using GitHub Copilot. Which is consistent with this author’s own findings: while you can experience the occasional slam dunk, AI will usually only act as a friendlier alternative to the highly pedantic StackOverflow. In other words, it is a great learning tool, but a poor employee.

Worse than that, if used incorrectly it can blow up exponentially. An increasing number of candidates are using it in their tests, ending up with code they hardly understand themselves, let alone are able to defend in an interview, often because it is so stupid that you cannot defend it. Developers start taking their eyes off the road and end up writing 5x too much code in 5x too much time. Vibe-coding is even suspected of being behind major data leaks (although probably not yet). Coding agents are actually 10x juniors: same skill level, 10x the potential for harm. This is going to hurt the development industry badly.

What you need to understand is that a developer spends only part of their time doing what AI can do: transforming a human-language specification into actual computer code. According to Microsoft, this represents about 44% of a developer’s time. Within that, roughly 50% of the time is spent reviewing your own work, making sure it fits the specifications. Now let’s say that AI speeds up the remaining half by 30%. You’re left with:

44% × 50% × 30% ≈ 6.6%

That’s 7% of developer time saved by AI. When being overly optimistic. Weehee.
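If you want to play with the assumptions, the back-of-the-envelope calculation fits in a few lines. This is only a sketch: the function and parameter names are made up for the illustration, and the default values are simply the 44%, 50% and 30% quoted above.

```python
def developer_time_saved(coding_share: float = 0.44,
                         writing_share: float = 0.50,
                         ai_boost: float = 0.30) -> float:
    """Fraction of total developer time saved by AI under the article's assumptions.

    coding_share:  share of a developer's time spent turning specs into code
    writing_share: share of that coding time not spent reviewing your own work
    ai_boost:      assumed speed-up AI brings to the writing part
    """
    return coding_share * writing_share * ai_boost


if __name__ == "__main__":
    # Defaults reproduce the figure from the text (roughly 7%).
    print(f"{developer_time_saved():.1%}")
    # Even doubling the assumed boost leaves most of the working week untouched.
    print(f"{developer_time_saved(ai_boost=0.60):.1%}")
```

Whatever value you plug in for the boost, the result is capped by the slice of the job that AI can touch in the first place.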

This might come as a surprise, but even the development industry, which is hailed as the use-case for AI, fails to show any kind of productivity gains from it. AI might be a great help, as a teacher for example, but if it brings any productivity at all the gains are so small that they cannot be measured.

DinoSquad to the rescue

As a CEO, even if your business is shampoo, you are going to feel an itch to make a bold claim about your company being AI-first in an attempt to stay relevant. At this point most big players fell into the trap, and we’ll use Salesforce to exemplify this.

Salesforce is a complex set of products. Essentially they have one “offer” for each bullet point that you could ever encounter in a board meeting, made accessible in the form of a huge license fee which may or may not give you access to some piece of technology that solves said problem. Behind that you have huge IT integrators, usually outsourced to Asia, which take months to move a paperclip, with setup fees matching the license fees.

We’re not here to understand how exactly they manage to convince their customers to spend so much money on something that should be a tenth of the price, but the fact is that this whole machine works because the very point of Salesforce is being complex, opaque and hard to use.

So when they say that they are going to have Agentforce, supposedly a generic and simple solution to be plugged on top of a platform whose core culture is custom integrations, it appears that the market doesn’t like it too much and the share value drops by 20% over 2025, without a single success story of Agentforce in sight (outside of the Salesforce website, obviously).

Essentially, it’s what has always happened. New technologies are not embraced by old players to increase their business efficiency by a marginal amount. Especially not when the industry has turned into a gridlocked field designed to prevent new entrants from coming in. The change always happens by making the industry irrelevant in the first place.

Which also translates to the workforce. The skills required today to be a Salesforce employee do not match the skills required to run a successful AI business today. There is no training in existence that can prepare you for this, simply because no one knows yet what the job descriptions that you need to work on AI even are. Prompt engineer? Agent developer? No one knows!

At this point, the nascent AI industry, which is the one that builds on top of LLMs, still needs to take shape. This will happen through a Darwinian process, where the people who made the right bets now will reap the rewards in 5 to 10 years.

Saturn’s revolution takes 10 years

There is no telling how much developers, for lack of tools, have influenced UX patterns (and even entire workflows) over the past decades, but you should consider that everything you know and use daily is up for grabs.

The Web was launched in 1989. By 2000 it caused a market crash. 2004 saw the launch of Gmail, which introduced the “Web 2.0”. Essentially, that was the first application built for the Web realizing the full power of the platform. It took another decade to build the frameworks and tools that allow the Web we know today to exist. Millennials have always known a world in which the Web exists and yet it took almost their whole lives to see the industry even understand what you could do with it.

GenAI is not any different. Forget about ChatGPT being the fastest product to reach 1 million users. GPT-1 was created in 2018 and the ChatGPT boom was in 2022. It took almost 5 years to reach a million users, as with most products that haven’t found a market fit yet. It will take up to another decade before someone releases a full app maximizing what AI can do.

Have you ever wondered why so many content systems have tagging options? Why when you go on an e-commerce-type platform there are dozens of more-or-less usable filters? Do you think it’s because users thought that the best way to look for a product was to type the kind of product, get 50% of false positives on the keyword search, quantify every single parameter of the product and look for the ideal match?

That’s a wildly different experience from stepping into a store. Looking for a TV? The seller is gonna ask you your budget, the size of your living room and will start helping you decide which gimmick feature you should sacrifice to get the best fit for your taste. Looking to rent a movie? Tell the clerk your mood, the movies you’ve seen and they’ll help you find a tailored match. Those experiences can only exist when you can understand human language.

Tomorrow you could see a book publishing platform on which you find the book by explaining the kind of experience you want reading it. It will give you a book that you pay per chapter, with a level of vocabulary and verbosity perfectly tuned for your taste. In the language that you speak, regardless of the language in which it was written. Because yes, it is still written by a human, the AI will only index, find and post-process it. That is one example.

GenAI will insert itself at every single level of every single application that will be produced. It will become seamless, as invisible as the air we breathe and equally indispensable. You will not even remember how you could possibly have survived before GenAI powered all this.

The question is not to understand if there is a market for GenAI to replace search, if coding assistants are selling enough subscriptions or if humans will lose their jobs. That’s totally irrelevant.

We are headed towards a world with an entirely new layer in the value chain. Whole industries that did not previously have an equivalent. Applications that solve problems that have only been worked around so far. The possibilities are absolutely massive.

Net positive karma by 2030

Overall, AI has a pretty controversial image right now. A lot of the population is taken by this primal fear of the machine, rationalized into arguments focused on energy consumption and general resource usage.

First of all, let’s remember that those claims about extreme electricity needs are made mostly by the people selling AI right now. If we’ve learned anything so far in this article, it is that the AI industry needs a lot of posturing in order to make itself look bigger than it actually is and not lose the trust of investors.

In reality, the technology is getting cheaper by the day. And as explained above, it will run pretty soon on every single device. Which is a huge opportunity in terms of accessibility.

The reason why is pretty obvious. AI can see and hear like a human and transcribe one into the other or vice-versa. Blind people can get a permanent audio description and interact with every digital device through their voice. Deaf people get subtitles and other meta-information about their surroundings.

But it’s also even bigger than you would think. Disabilities that are not “serious” enough to warrant much concern but that are annoying enough that if you solved them you would make millions happy… There are a lot of them.

Take for example Auditory Processing Disorder. You hear perfectly but you can’t understand what people say when they talk in a bar for example. That’s not gonna ruin your life, but that’s gonna be very fucking annoying in your 20s when you want to flirt in a nightclub. Or color blindness: sure you can see everything just fine, but when dressing up you have no way of knowing if you’re going to look like ~~Sam Altman~~ a clown. Or dyscalculia, or ADHD, or autism, or any other condition of that type.

So what if you could have glasses that see what you see, record what you hear and supplement your memory, translate social cues if you have autism, translate languages you don’t speak, keep a buffer of the current conversation if your ADHD drives you off for a minute, and so on?

Put all those conditions together and you’re sure to rack up something like 60 to 70% of the population. That’s a huge number of people who stand to benefit from an everyday bump from GenAI.

Getting jammed

At the end of the day, GenAI is like jam. You open the jar, start taking full spoons and eat it very fast, to your satisfaction. But very quickly, the jar empties and the spoons become less full. You have to start scraping the walls to find more jam. And with every spoon, surely enough, you always bring up some jam. It feels like jam is forever. But in the end you’re only getting a fraction of what is left, which quickly turns out to be nothing at all.

So instead of desperately trying to extract endless amounts of jam from a fixed-size jar, because that would be absolutely stupid, you had better enjoy it sparingly, spread on some nice buttery toast, just the right amount to get the perfect flavor balance. That’s how you are supposed to enjoy jam.

[1] For example, on OpenRouter we can see that one of the most used (thus most useful) models is Gemini 2.0 Flash, sold at $0.40 for 1 million tokens, which is a lot cheaper than GPT-4o, which costs $10 for a worse capability.

[2] OpenRouter is the best proxy that I could find to measure the market share of models. They provide unified model management for companies, allowing them to dynamically use the best model for their needs at a given time. Their customers are B-list startups, often not on Crunchbase, meaning that they most likely pay for their tokens with actual revenue from actual users. In a nutshell, this reflects the real business cases for AI. On the other hand, it’s absolutely biased towards this kind of client.

[3] I know it’s a syllogism and it’s a lot more complicated than that, but you see my point: technology is moving even faster than Moore’s law in the world of AI inference.

[4] Yes, I said that chat is a terrible use-case for LLMs, yet one of the main use cases is indeed chat. But we’re talking about the kind of chat where appearances matter a lot more than facts or logic. This is not what you would expect from most business chat use-cases.